In a Kubernetes cluster there are two main log sources: your application and the system components. Your application runs as a container in the cluster and writes its logs to the stdout and stderr streams, which the container runtime (Docker, for example) captures. Both of these streams are written to a JSON file on the cluster node. These container logs can be fetched at any time with the `kubectl logs` command.

The other source of logs is the system components. Some of them (namely kube-scheduler and kube-proxy) run as containers and follow the same logging principles as your application. The others (the kubelet and the container runtime itself) run as native services. If systemd is available on the machine, these components write logs to journald; otherwise they write a.

Now that we understand which components of your application and cluster generate logs and where they're stored, let's look at some common patterns for offloading these logs to separate storage systems. The two most prominent patterns for collecting logs are the sidecar pattern and the DaemonSet pattern.

In the DaemonSet pattern, logging agents are deployed as pods via the DaemonSet resource in Kubernetes. Deploying a DaemonSet ensures that each node in the cluster has one pod with a logging agent running. This logging agent is configured to read the logs from the /var/log directory and send them to the storage backend. You can see a diagram of this configuration in figure 1.

Figure 1: A logging agent running per node via a DaemonSet

Alternatively, in the sidecar pattern, a dedicated container runs alongside every application container in the same pod. This sidecar can be of two types: a streaming sidecar or a logging agent sidecar.
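To make the DaemonSet pattern concrete, here is a minimal manifest sketch. It is an illustration under stated assumptions, not a production configuration: the names, namespace, and image are hypothetical placeholders, and a real agent would also need its own configuration and credentials for the storage backend. Kubernetes nodes keep container logs under /var/log, so the sketch mounts that host directory read-only.

```yaml
# Sketch only: runs one logging-agent pod on every node in the cluster.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: logging-agent            # hypothetical name
  namespace: kube-system         # assumption: any namespace would work
spec:
  selector:
    matchLabels:
      app: logging-agent
  template:
    metadata:
      labels:
        app: logging-agent       # must match the selector above
    spec:
      containers:
        - name: agent
          image: example.com/logging-agent:latest   # placeholder, not a real image
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true     # the agent only reads node logs
      volumes:
        - name: varlog
          hostPath:
            path: /var/log       # node directory that holds the log files
```

Because the agent is scheduled per node rather than per pod, application pods need no changes under this pattern.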

How to run Filebeat on Kubernetes

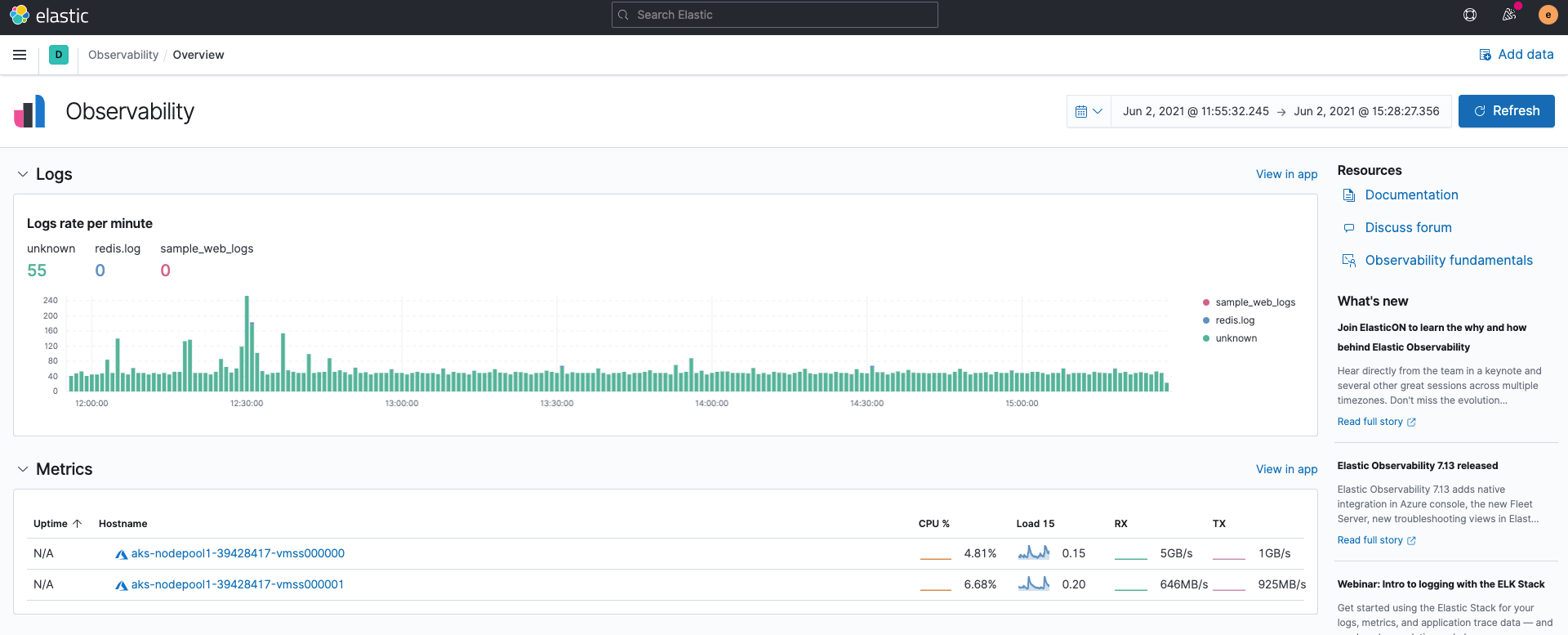

As more companies run their services on containers and orchestrate deployments with Kubernetes, logs can no longer be stored on machines, and implementing a log management strategy is of the utmost importance. Logs are an effective way of debugging and monitoring your applications, and they need to be stored on a separate backend where they can be queried and analyzed in case of pod or node failures. These separate backends include systems like Elasticsearch, GCP's Stackdriver, and AWS' CloudWatch. Storing logs off of the cluster in a storage backend is called cluster-level logging. In this article we'll discuss how to implement this approach in your own Kubernetes cluster.

Historically, in monolithic architectures, logs were stored directly on bare metal or virtual machines. They never left the machine disk, and the operations team would check each machine for logs as needed. This worked on long-lived machines, but machines in the cloud are ephemeral.

The setup uses a resource file that specifies the log format and location, alongside filebeat.yml. Add filebeat.yml to resources (e.g. oom/kubernetes//resources/config/log/filebeat/filebeat.yml). A sample filebeat.yml can be found in the log demon node project: a=tree f=reference/logging-kubernetes/logdemonode/charts/logdemonode/resources/config/log/filebeat h=abf4f0a84ae7a15ed99fc4776727e4333948b583 hb=d882270162d56c55da339af8fb9384e1bdc0160d

Add the Filebeat container to deployment.yaml. The -data-filebeat volume mount is used to share Filebeat logs. Add a configmap to the component to reference the filebeat.yml file that will be deployed to the container (e.g. kubernetes//templates/configmap.)
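The deployment.yaml change described above might look roughly like the following sketch. It is not the OOM chart itself: every name here (example-app, app-logs, example-filebeat-configmap, the application image, and the /var/log/app directory) is a hypothetical placeholder, and the Filebeat image tag is an assumption to be replaced with the version you actually run. The paths under /usr/share/filebeat are the defaults used by the official Filebeat container image.

```yaml
# Sketch only: an application container plus a Filebeat sidecar in one pod.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app                      # hypothetical component name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app
    spec:
      containers:
        - name: app
          image: example.com/app:latest  # placeholder application image
          volumeMounts:
            - name: app-logs
              mountPath: /var/log/app    # assumed directory the app logs to
        - name: filebeat
          image: docker.elastic.co/beats/filebeat:7.17.0   # tag is an assumption
          volumeMounts:
            - name: app-logs             # same volume, so Filebeat sees the app's files
              mountPath: /var/log/app
              readOnly: true
            - name: filebeat-conf        # filebeat.yml supplied by the configmap
              mountPath: /usr/share/filebeat/filebeat.yml
              subPath: filebeat.yml
            - name: filebeat-data        # Filebeat's own working data
              mountPath: /usr/share/filebeat/data
      volumes:
        - name: app-logs
          emptyDir: {}
        - name: filebeat-data
          emptyDir: {}
        - name: filebeat-conf
          configMap:
            name: example-filebeat-configmap   # hypothetical configmap name
```

The shared emptyDir volume is what makes the log files accessible between the two containers; it lives only as long as the pod does, which is acceptable here because Filebeat ships the logs off the pod.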

The aggregated logging framework (Logging Architecture) uses Filebeat to send logs from each pod to the ELK stack, where they are processed and stored. This requires each pod to have an additional container that runs Filebeat, and the necessary log files must be accessible between containers.

A reference log demon node project exists that can be used as a guideline for the suggested setup in OOM.

For the setup, we will add two resource files. One of them is filebeat.yml, the Filebeat configuration file, which determines the path where log files are found and any other Filebeat configuration.
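As an illustration of what such a filebeat.yml could contain, here is a minimal sketch. It is not the sample from the log demon node project: the log path and the Logstash host are hypothetical placeholders, and it assumes a Filebeat version that accepts `filebeat.inputs` with the `log` input type (newer releases favor the `filestream` input instead).

```yaml
# Sketch only: read log files from a shared directory and forward them to Logstash.
filebeat.inputs:
  - type: log
    paths:
      - /var/log/app/*.log               # assumed directory shared with the app container

output.logstash:
  hosts: ["logstash.example.svc:5044"]   # placeholder address of the ELK stack's Logstash
```

Keeping this file in a configmap, rather than baking it into an image, lets the log path and output be changed per component without rebuilding anything.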
